Cinestar Sneak Preview Dataminer

Motivation

After I graduated school in June 2018 I had a lot of free time and some friends told me about the Sneak Preview offered at my local cinema where you could watch an unknown, not yet released movie for about 5€.

Since I did not have that much to do that summer we went a few times and saw some okay and some bad movies but I still thought of the concept as interesting.

Because I always wanted to experiment with data science and machine learning but never found an interesting data source I got an idea. What about using the website of the cinema which lists all previous movies together with some more info from themoviedb to try and get some interesting insights into the choice of these sneak previews and maybe even predict future movies.

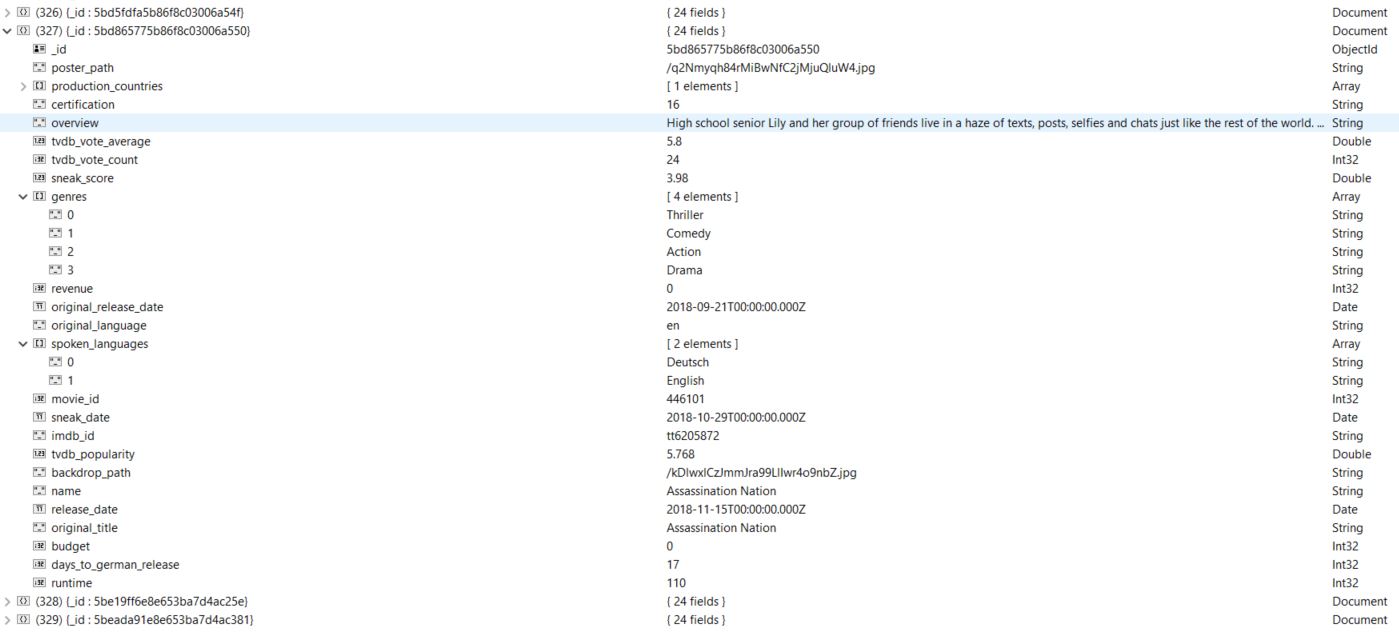

Before I could do all this futuristic data science I still had to have the data ready to use so the first step had to be getting the movies from the website, collecting info like official release date, age restriction etc from themoviedb API and saving it into a database.

Hence this project was born.

How it works

- Gets the history of previous movies website

- Parses the html

- Searches for the movie titles in themoviedb.org API

- Uses the info from the API (if found) and saves it together with name,date and review score in cinema

Code

Unfortunately I can’t publish the code yet since I wasn’t smart enough to keep API keys and similiar out of the repo.

Future plans

- Maybe I will implement proper error managment with a service like datadog and use slack for notifying me on errors and successfull execution similar to my mensa datamining project

- Another plan is to deploy this and my other datamining scripts to AWS Lambda and utilize planned execution there instead of running them with cron on a RaspberryPi to have optimal reliability and no cost with such low execution time

The whole idea of collecting all this information is to later help me use real world data in some data science experiments and maybe try some machine learning models with this data.

There probably won’t be any real use of collecting this data but it’s fun to use technology to find out some more about the real world and maybe even use it to predict/enhance some things via e.g. machine learning.

I even played a bit with things like plotting the data in scatterplots, linear plots and using some features with sklearn. Because of the trial and error method with no real thought about the information value of every feature I did not find any meaningful result yet.

And even if it turns out to not be useful at all it I had fun doing it and learned some things about web scraping, REST APIs and database clients eg MongoDB in python.